When OpenAI was founded in 2015, the company set out to “ensure that artificial intelligence benefit(s) all of humanity.” Its latest breakthrough is an artificial intelligence (AI) chatbot named ChatGPT. GPT stands for Generative Pre-trained Transformer, which denotes that the chatbot is built on a language model that relies on deep learning. The chatbot runs in a web browser: a user interacts with it by typing a question or some information into the prompt field, and ChatGPT responds in a conversational way. It can also “ask follow-up questions, admit its mistakes, challenge incorrect premises, and reject inappropriate requests” (OpenAI, 2023).

Since the chatbot's release in November 2022, the potential of the technology’s applications has not been fully realized or understood; however, its limits are being tested daily by users across many different fields of study. For example, practitioners of computer science and computer programming are reporting mixed results when the AI attempts to write code in languages such as HTML and JavaScript. The chatbot can diagnose common issues in small code segments, but when faced with the challenge of writing new, original lines, the output is often filled with syntax errors.

This is just one example of how practitioners in other fields have tried to leverage this powerful technology to solve complex business problems. But what about security? How can a security professional leverage this technology? And are there any dangers?

Colleagues from ASIS International, a security organization I am a member of, have already begun to limit-test this technology. ChatGPT has been used to produce work documents, such as post orders for security guards, emergency evacuation plans, and policy documents, by feeding the chatbot informative requests.

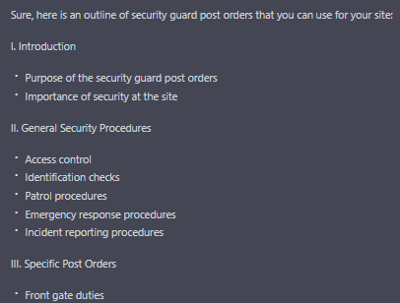

Using the free version, I decided to put ChatGPT to the test. Drawing on my experience as a former federal police officer, I instructed the chatbot, “I need to create an outline of security guard post orders for my site.” The response from ChatGPT was a basic outline of security post orders with nine headings, each with three subheadings, generated in just a few seconds! I noticed that the outline did not include a section on shift change. A proper set of security post orders should specify the pass-down procedures from the outgoing shift to the incoming shift, as many security posts operate 24/7. I replied to ChatGPT, “My outline will need a section on shift change.” ChatGPT immediately regenerated the same outline, this time with ten headings. The new heading was inserted after section eight of the original outline.


I found this experience to be exceptional. ChatGPT is, indeed, quite powerful. My mind was instantly filled with limit-testing possibilities and even some ideas for how I could use this in my own daily routines; however, there are some drawbacks. In many ways, ChatGPT could be viewed as simply an enhanced search engine. It will communicate with you on many topics, but much like Apple’s Siri, it will avoid questions or statements that are philosophical or subjective in nature.

Moreover, some have noted that ChatGPT will sometimes return responses that may contain uncited information. My colleagues on the ASIS Community Forums have expressed concern that a practitioner who relies too heavily on ChatGPT to generate content for client business problems could run into plagiarism issues, as many of ChatGPT's responses include surface-level information easily found on Wikipedia.

OpenAI has established usage policies, including a lengthy list of “disallowed” uses. Critical for the security field is the policy prohibiting the use of ChatGPT for “High-risk government decision making: Including but not limited to: Law enforcement and criminal justice…” If a security practitioner’s portfolio includes government or law enforcement, asking ChatGPT and using its default response(s) is not the way to support your client.

The era of AI in business has arrived. But user beware: There is risk in using a tool without understanding how it works. Even with the best intentions, a lack of understanding about an AI tool's proper uses can cause unwanted or even harmful results. Similarly, AI tools are no substitute for proper expertise in certain fields, such as security. If used appropriately, ChatGPT is a powerful tool that can offer new possibilities for balancing efficiency, complex business problems, and client requests. As AI technology becomes more prominent across business sectors, security practitioners must remain vigilant in understanding and addressing the risks if and when they choose to use this tool.

About the Author

Lance Carlisle

Security Specialist, Senior Associate at Anser Advisory

Lance Carlisle is a Senior Security Consulting Specialist passionate about utilizing data analytics to inform security solutions. He holds an MS in Criminal Justice with a specialty in data analytics and a BS in Criminology, Law, and Society with a minor in intelligence analysis, both of which he obtained from George Mason University. Prior to his current position, Lance served as a federal law enforcement officer for four years, developing a deep understanding of crime data analytics, policy and post orders, case law, and investigations. Lance has since applied his expertise to private security, specializing in security computer software. He is proficient in C-Cure 9000 and Lenel command software, among others. Lance is also a licensed private investigator in Virginia and a member of ASIS International. His commitment to staying at the forefront of industry developments is unwavering, as he strives to continuously improve security solutions for clients across industries.
